by Anmol Ahuja | Published on: Oct 13, 2023

Of all the multifarious directions that conversations on the use of artificial intelligence, and what it means for us as a collective, have gone in (and could go in), the presence of a human tether to bring these findings and musings home has been imperative. That tether, placed around the fundamental question of what separates a ‘living’ being from a machine, an abstract sliver of difference between an emotional and an artificial intelligence, between ‘us’ and algorithmically-driven processes of machine learning, is wholly essential as a prerogative to drive conversations such as the ones at Technology and Design Lab at this year’s London Design Festival. It also bears the crucial, enduring modicum of reassurance that allows us to head home and proceed with our near-simulatory daily lives. A large majority of us remain heedless of the ways in which AI and automated machine learning processes have already upended, or accelerated, the functional aspects of our lives. The future, indubitably, holds a much more intrinsic interweaving of the ‘human’ and the ‘artificial’. While we may not fully blur these boundaries, the very definition of what those boundaries enclose, and their relative positions, is bound to be radically redefined.

That tether, admittedly, is tinged by a sense of overwhelm fuelled by AI’s recent, accelerated proliferation through more public means, including the likes of OpenAI’s generative text platform ChatGPT and Google’s Bard, as well as AI-powered text-to-image generators Midjourney, DALL·E, and Adobe’s Firefly. A faction of us definitively veers towards lamenting this unforeseen and faster-than-anticipated proliferation as well. If a major demand tabled in the recent SAG-AFTRA strikes, “protection against AI”, is any indication, the democratic aspect of these mostly free-to-use platforms is shadowed by creator concerns. The act of artistic creation, much like the human-machine dichotomy discussed above, is placed in direct opposition to generative, especially prompt-based, AI. Even contemporary meme culture and advertising aren’t spared the deluge of AI-generated text, imagery, and sounds. This, however, remains a myopic understanding of the possibilities that AI offers at present: as a tool of augmentation and analytical prediction, a supplement to the creative process, more than anything else.

Understandably, uncertainty for the future persists in this realm among us humans; however singular or collective that term may be for you. We grapple with the sheer mass of possibilities that AI and machine learning have already offered in a number of ways, along with an entire unexplored realm of possibilities still beckoning and anticipating our catching-up. It is like peeking through the rift; the possibilities are surely exciting, but the magnitude of what lies at the other end, whose singular glimpse has been both life-altering for an entire civilisation and then life-affirming for some, has to be intimidating for the scientific temperament laden with an unshakeable sense of history. For if history lends any evidence, the human-AI alliance was indeed never going to be a smooth one, especially because it involves what may be termed a creator complex on one hand, and the perceived subservience (along with the important and vastly interesting question of its autonomy) on another. Which is which, is a wholly different matter and fodder for the realm of science fiction.

Now in its sophomore year, Technology and Design Lab by open-ended design and LabKO, co-curated and hosted by Suhair Khan, pivots along these essential questions but through peripheral means, questioning the ramifications of that proliferation as a way to examine the core problem. Set in The House of KOKO near the counter-cultural haven of Camden, the conversation refreshingly starts from a point where the question of “whether AI” is suitably bypassed in favour of coming to terms with it and looking to the future, building upon themes it addressed last year with evidently more flavour, and definitely more urgency. Its key strength, recurrent from its inaugural edition, lies in the diversity and cross-disciplinarity of its speakers, and in the varied interests they represent at Technology and Design Lab 2023, with a common anchorage in human-centric design. The very nature of the nearly four-hour-long programming begets that diversity, bearing testament to two rather important facets of any discussion around AI. The first, quite simply, has to do with the number of spheres AI and machine learning penetrate in our daily being; the second is the essentiality of collaboration and collectives that transcend disciplinary linearity in dealing with the anxieties and fears associated with technology. Divided into four major ‘acts’, the series of talks began with a performance by English musician and new media artist Harry Yeff along with a conversation between Yeff and Suhair, followed by a more plural bucketing of speakers under the relevant themes of ‘Creativity and Intelligence’, ‘Ecology and AI’, and ‘Responsible Tech’, each probing the essential AI question. All of this followed a pensive introduction to the event by both Khan and Ben Evans CBE, director of the London Design Festival.

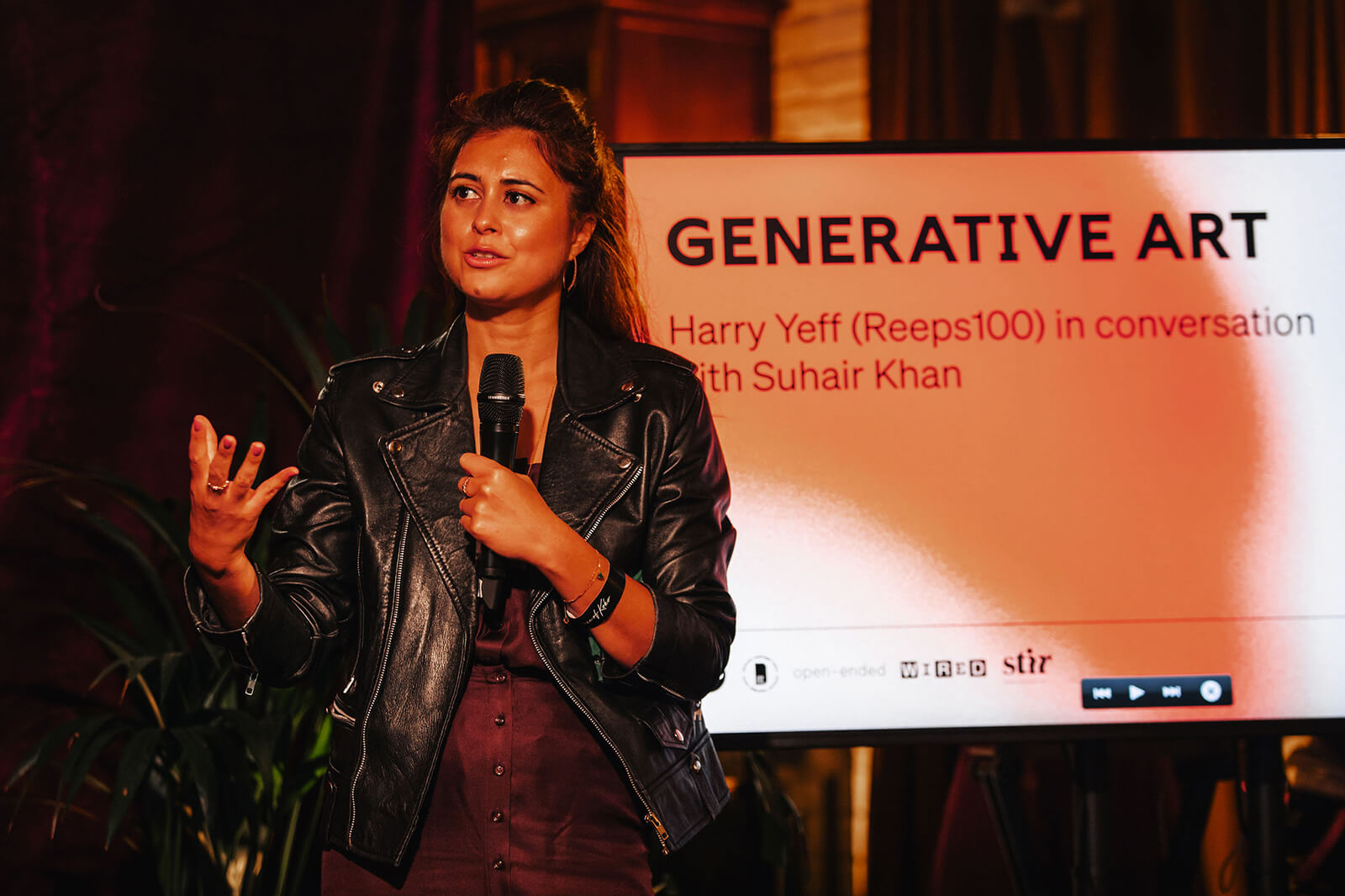

Harry Yeff in conversation with Suhair Khan

Better known by his stage name Reeps100, Yeff is a London-born neurodivergent artist and musician-technologist with a distinct focus on voice, AI, and tech-based performance art. Yeff’s singular voice on the use of AI as an essential augment (a chief term here) to his craft was resounding and affirmative on several fronts. For Yeff, his relationship with AI in the context of his work navigated questions of “narrative, zeitgeist, aptitude, and values”, while still maintaining truth and authenticity even in the face of questions of trust around creative control. Yeff highlighted the importance of AI and the machine as a necessary oppositional force, a novel perspective by all means. “To have a system take your expertise and fight you back with it,” Yeff propounds, was both exhilarating for him and presented a unique set of challenges that have only allowed him to hone his craft, while also speaking to the impressive state of AI as being in constant metamorphosis through learning from large, intangible data sets. Speaking of deviation from the norm being a way of life for him owing to his neurodivergence, and of a kinship he had always felt with non-linear thinking, he described one of his experimental tunes as “me and not me at the same time”, a reckoning whose fascination and newness weren’t diminished by the uncanny in it. Yeff’s closing question in his conversation with Khan, “Who owns intelligence? Where does it come from?”, further begs a critical re-examination of hegemonic structures reinforced around creative work and art, and of the relevant fear of gatekeeping garbed as creator protection and resistance to change.

Marcus du Sautoy, Rebecca Fiebrink, and Ben Cullen Williams, moderated by Suhair Khan

Tiptoeing around notions of what it meant to be a creative professional in the age of artificial intelligence, and what it implied for their own individual practices and research, the three speakers had radically different views and uses of AI, and of what proportions creativity assumed in the presence of a tool like AI. Yet still, they seemed to find consonance in the distillation of a plethora of data into tangibilities as, again, augmenting their practices, as opposed to being them. Ben Cullen Williams, a multidisciplinary artist, for instance, cited the use of AI as rather indispensable in the iterative process of his art. Williams was firm on the ability of the human eye to spot an aberration, and an iteration that sticks after hundreds of do-overs, with AI assisting in bringing together those iterations while remaining far removed from the end product. Marcus du Sautoy, a Mathematics professor at Oxford University and a best-selling author, echoed that sentiment, but remained insistent on the importance of the human eye in spotting the logic behind data patterns. Recounting a particular instance from his experiments with machine learning and mathematics, wherein AI could sift through heaps of data to point out the presence of a pattern but couldn’t explain that pattern, du Sautoy pressed that the onus of analytical probing was still on the human. Akin to Yeff’s “augmented intelligence”, he equated the use of AI to Galileo’s fondness for his telescope: at the precipice of an entirely new world, but a tool nonetheless. Not negating the role of AI and machine learning in mathematics especially, wherein a lot of the data is volumetrically dense and temporally vast, du Sautoy closed on a note of anticipation and duality. While most closely rendering the human-machine binomial among the speakers at the conclave, stating how “AI had difficulty in telling stories” owing to its disregard for embodiments as such, he also christened it “humanity’s best tool to explore the hard question of consciousness.”

On the other hand, Rebecca Fiebrink’s work at UAL’s Creative Computing Institute (CCI) places the human aspect of design at the forefront, examining critical frameworks around decarbonisation and decolonisation through the lens of AI and tech availability to the most vulnerable and disenfranchised groups. Her most recent project focuses on the use of AI to decolonise museum and institutional collections, with a strong focus on contextualising—both socially and politically—the genesis of the creative work, further ensuring the participation of stakeholders and collaborators and their inclusion in feedback loops. “There is a continuum between where AI is right now and where people are, and efforts are to be made to make it (AI) move towards that,” she states in conversation with Suhair.

Chance Coughenour and Seetal Solanki, moderated by Morgan Meeker

Questions of environmental preservation and sustenance do not, and should not, escape most contemporary public programming, and for good reason. In the face of overwhelming new technology, especially, fears for ecology are nothing if not contextually valid and well-founded. The conversation at the Technology and Design Lab between Seetal Solanki, British academic and founder of the design and material lab Ma-tt-er, and Chance Coughenour, who is Senior Program Manager at Google Arts & Culture, spans the dual scales of their own individual work and their service to their larger organisations. At Google, Coughenour describes how he leverages tech and AI in his current projects, including a free online storytelling platform, digitising collections, visualising climate data, and monitoring the health of coral reefs, with a rather profound mission statement grounded in heritage preservation: “to help humanity become more empathetic towards their neighbours and communities by learning and sharing heritage,” Coughenour states. He sees the more ambitious aspects of his work and its most current application in the realms of ecotourism, preserving digital copies of objects and places, and getting sites endangered by climate change enlisted on UNESCO’s list of world heritage sites.

Solanki, the other half of a spirited conversation, gears the discussion towards language, the migration of people and materials, respect for and holistic use of materials, and unpacking the “local” along cultural, contextual, and spiritual dimensions through her work at Ma-tt-er. Her mission statement aligns with viewing ‘material’ itself as a driver, conduit, and metaphor. And while language is extremely important to the cultural dimensions of her work, she found the meeting of the two terms, language and artificial intelligence, to be rather obscene, stressing the “how” in the usage of this technology, and its ethical ramifications. “Working together is the dream scenario,” she states, pointing to the very genesis of shared living in an ecology: to be more accepting of the differences we have.

Ego Obi, Samantha Niblett, and Konstantina Palla, moderated by Suhair Khan

The recent Mark Zuckerberg hearing in front of the US Congress on issues relating to privacy, data mining and sharing, and regulations over his platform Meta brought into the limelight what has been at the core of every tech-related fear (and ambition): that of involuntary encroachment, a quasi-sentience, and of exceeding the intelligence it was defined for while placing a people’s essential rights in jeopardy. Such questions loom larger and heavier in the context of AI and its autopoiesis imbued by machine learning. How does one seek to regulate, control, or bind something designed in one’s own image, but programmed to be better and constantly learning, without the fatigue? The e-circulation of deepfake photographs and voiceovers with unreal accuracy has raised these questions on a minuscule scale, but the fearful discovery of the other end of this spectrum remains to be made. The practitioners and professionals on this panel, while recognising the need for regulations and humanistic ethics, interestingly place a share of the responsibility for safeguarding users with AI itself. Perhaps the most direct embodiment of this is in Palla’s work as a machine learning researcher at Spotify. While most of her profile revolves around protecting the user and listener by preventing harmful content from being uploaded to the platform, she isn’t unmindful of the responsibility question around new tech and AI. “The approach is being proactive,” she states, in “understanding the worries and fears that might appear after the algorithm becomes active. That requires diversity and a lot of education”, stressing the need to maximise data sets for more relevant conclusions and to eliminate biases.

Both Niblett, leader of Labour’s Women in Tech Council, and Obi, a senior Google executive with over two decades of experience, were extremely passionate speakers who both recognised the asymmetry in the availability and literacy equation for tech, even in seemingly advanced countries like the UK. Their common advocacy spanned the bridging of politics and policy for data security, moving from the largely binary conversation in AI to diversifying it using political, regulatory, and media spheres, and the inherent biases that tech embodies simply because its designers embody the same. The conversation ended on an introspective note, with the speakers turning the tables on the users as bearing part of the responsibility through extensive feedback loops, engaging in policy discussions, breaking the spread of misinformation, and challenging AI companies on the ethics of data collection.

In closing, I am reminded of Philip K. Dick’s Do Androids Dream of Electric Sheep?, the source of inspiration for one of the most influential human-android, or human-‘artificial’ standoffs in science fiction history. The embodiment and even possible personification of artificial intelligence in the face of this debate bears the imaginative hubris of who, or what, the android and electric sheep are, and who, or what, dreams.

You can listen to all four episodes of the discussions in Technology & Design Lab 2023 on open-ended's official Spotify channel.

London Design Festival is back! In its 21st edition, the faceted fair adorns London with installations, exhibitions, and talks from major design districts including Shoreditch Design Triangle, Greenwich Peninsula, Brompton, Design London, Clerkenwell Design Trail, Mayfair, Bankside, King's Cross, and more. Click here to explore STIR’s highlights from the London Design Festival 2023.

(Disclaimer: The views and opinions expressed here are those of the author(s) and do not necessarily reflect the official position of STIR or its Editors.)
